What are the biggest AI ethics issues in 2026 and why should we care?

AI ETHICS ISSUES 2026

1/25/2026 · 5 min read

What Are AI Ethics Issues in 2026? Why Everyone Is Worried

Artificial Intelligence now writes reports, filters job applications, recommends medical treatments, moderates social media, generates videos, and even influences what news people see. In 2026, AI is no longer a “tech topic”. It is a life topic.

Across America, Europe, Russia, Asia, and Australia, a serious public conversation has begun. Not about what AI can do — but about what AI should be allowed to do.

This is where AI ethics enters the picture.

People are not questioning innovation. They are questioning control, fairness, safety, and responsibility. When machines begin making decisions that affect jobs, health, privacy, and democracy, ethics becomes everyone’s concern.

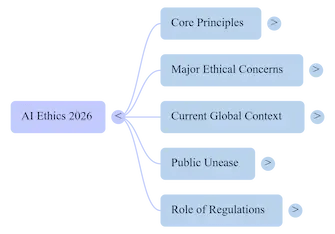

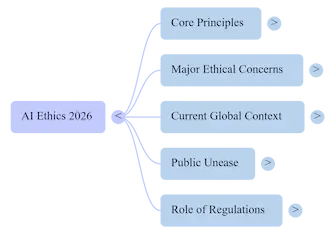

This mind map gives you a quick overview of the concepts covered below.

Meaning of AI Ethics in Simple Words

AI ethics simply means:

Making sure intelligent machines behave in ways that are fair, safe, transparent, and respectful to human rights.

Think of AI as a very intelligent assistant trained on massive amounts of past human data. If that data contains bias, discrimination, or errors, the AI will unknowingly repeat the same mistakes — but at a much larger scale and much faster speed.

AI ethics asks important questions:

Is the system fair to everyone?

Is personal data being used responsibly?

Can humans understand how the AI made a decision?

Who is responsible if something goes wrong?

These are no longer philosophical questions. They are practical issues affecting daily life in 2026.

Why AI Ethics Became a Global Debate in 2026

Over the past two years, AI systems moved from experimental labs into real-world decision-making. This rapid adoption exposed serious gaps.

According to the Stanford AI Index Report 2025, the number of reported AI-related incidents rose to 233 in 2024, an increase of 56.4% over the previous year.

A Pew Research study (2025) found that 62% of adults in the US and Europe are worried that AI systems do not treat people equally.

Meanwhile, the European Commission’s AI Act implementation report (2025) highlighted more than 1,200 cases where AI systems required regulatory review due to ethical or transparency concerns.

These numbers explain why AI ethics is now discussed in classrooms, newsrooms, parliaments, and workplaces.

Real-World Examples That Sparked Public Concern

United States – Recruitment Algorithm Bias

A multinational company deployed an AI hiring tool to screen thousands of CVs. Internal audits later revealed that the system consistently downgraded applications from women for technical roles. The cause was historical training data dominated by CVs from male applicants.

Problem: The AI learned past discrimination patterns.

Action Taken: The tool was withdrawn, retrained with balanced datasets, and human review was made mandatory.
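For readers who want to see what such an audit can look like in practice, here is a minimal sketch in Python. It compares how often each group is shortlisted and divides the lowest rate by the highest. The data, the group names, and the function names are all hypothetical and are not taken from the case above. The 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance; it is a warning sign worth investigating, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of shortlisted applicants per group.

    decisions: iterable of (group, shortlisted) pairs, where
    shortlisted is True or False.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in decisions:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Divide the lowest selection rate by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was shortlisted in any group
    return min(rates.values()) / highest


# Hypothetical screening results, invented for illustration only.
sample = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)

print(rates)            # {'men': 0.6, 'women': 0.3}
print(round(ratio, 2))  # 0.5
if ratio < 0.8:
    print("Selection rates differ enough to warrant a human review.")
```

In this invented sample, women are shortlisted half as often as men, so the ratio falls well below 0.8 and the tool would be flagged for closer review.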

European Union – Deepfake During Election Cycle

During a national election campaign, a highly realistic AI-generated video of a political leader circulated on social media. It reached millions before fact-checkers proved it was fake.

Problem: Synthetic media influencing democratic processes.

Action Taken: Enforcement of mandatory labelling for AI-generated media under the EU AI Act.

Russia – Facial Recognition and Public Surveillance

Facial recognition systems were introduced in public spaces for security monitoring. Citizens and digital rights groups raised concerns about constant tracking without consent.

Problem: Tension between security and privacy.

Action Taken: Introduction of transparency mandates on where and how biometric AI can be used.

Australia – AI Misdiagnosis in Healthcare

An AI system used for treatment recommendations showed reduced accuracy for patients from underrepresented ethnic backgrounds because the training data lacked diversity.

Problem: Health risk due to non-inclusive data.

Action Taken: Hospitals enforced human oversight and revised data training standards.
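A gap like this only becomes visible when accuracy is measured separately for each patient group instead of as one overall number. The sketch below shows the idea in Python. The records, group labels, and function names are hypothetical and purely illustrative; a real clinical evaluation would need far larger samples and proper statistical testing.

```python
def accuracy_by_group(records):
    """Return the share of correct predictions per group.

    records: iterable of (group, predicted, actual) triples.
    """
    correct = {}
    total = {}
    for group, predicted, actual in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation records, invented for illustration only.
records = (
    [("group_a", 1, 1)] * 9 + [("group_a", 1, 0)] * 1
    + [("group_b", 1, 1)] * 6 + [("group_b", 0, 1)] * 4
)

per_group = accuracy_by_group(records)
print(per_group)                           # {'group_a': 0.9, 'group_b': 0.6}
print(round(accuracy_gap(per_group), 2))   # 0.3
```

Across all 20 records the system is right 75% of the time, which hides the fact that one group gets 90% accuracy and the other only 60%.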

Key Ethical Concerns Businesses Are Facing

Organisations adopting AI quickly are realising that speed without responsibility creates risk. The major concerns include:

Hidden bias in automated decision systems

Misuse of customer data

Lack of explainability in AI outputs

Legal exposure due to new AI regulations

Workforce impact due to automation

As a result, many companies now establish AI ethics committees before launching AI products.

Why Ordinary People Feel Uneasy About AI

Public concern is rooted in everyday experiences:

Seeing realistic fake videos online

Not knowing how social media feeds are controlled

Hearing about jobs being automated

Discovering how much personal data is collected

Learning that AI decisions are often not explainable

People are not afraid of technology. They are uneasy about unregulated power.

How AI Ethics Affects Jobs, Privacy, and Society

AI automation is changing the nature of work. The World Economic Forum Future of Jobs Report 2023 estimated that 83 million jobs may be displaced by 2027, while new roles will require advanced digital skills.

This shift raises ethical questions:

Should employees be informed before automation replaces roles?

Should governments mandate reskilling programmes?

Is profit being prioritised over social responsibility?

Similarly, data privacy concerns are rising as AI systems depend heavily on personal information to function effectively.

Related Reading

To understand how bias in algorithms affected real lives, read:

AI Bias Examples 2026 That Shocked the World

Why AI Regulations Matter for Everyone

AI regulations are designed not to slow innovation, but to protect citizens.

They ensure:

Your personal data is not misused

Decisions affecting you are fair

AI-generated media is identifiable

Organisations remain accountable

Without such frameworks, individuals would have little protection against powerful automated systems.

How This Blog Series Supports Readers

At The TAS Vibe, complex AI topics are translated into practical, easy-to-understand knowledge.

This series will help you:

Recognise ethical risks in AI tools

Understand how AI laws affect daily life

Learn how businesses can adopt AI responsibly

Prepare for an AI-driven future with awareness

Conclusion

AI is transforming the world at extraordinary speed. The question facing society in 2026 is not whether AI should be used, but how it should be governed.

Ethics provides the compass that ensures AI serves humanity rather than harms it.

Understanding AI ethics is now part of being an informed digital citizen.

Author Bio

Agni is an AI educator, independent researcher, and the founder of The TAS Vibe. With years of experience studying how artificial intelligence impacts society, Agni focuses on translating complex AI developments into clear, human-friendly knowledge for global readers. Through research-based writing, practical examples, and educational resources, Agni helps students, professionals, and everyday readers understand the responsible use of AI in modern life.

Disclaimer

This article is intended for educational and informational purposes. It does not constitute legal, technical, or professional advice. Readers should consult official guidelines and experts for specific AI compliance matters.

Frequently asked questions

What are the major AI ethics concerns today?

Bias, privacy, transparency, misinformation, and accountability.

Why is AI ethics discussed so widely in 2026?

Because AI now influences real-life decisions across jobs, healthcare, media, and governance.

Do AI laws affect ordinary users?

Yes. They are designed to protect personal rights and data.

Can AI be fully ethical?

Only when developed and used with strong human oversight and responsible guidelines.

Copyright: © 2026 The TAS Vibe. All rights reserved.