AI Black Box Problem and the Need for Explainability

Discover the AI black box problem, why AI decisions often lack transparency, and how explainable AI and algorithmic accountability can build trust worldwide in 2026.

AI ETHICS ISSUES 2026

The TAS Vibe

1/28/2026 · 4 min read

AI Transparency Problems: Why AI Feels Like a Black Box

Artificial intelligence influences what we see online, how banks assess our creditworthiness, which candidates get interview calls, and even how doctors evaluate health risks. Yet for most people, AI feels invisible and mysterious: decisions appear on screens without explanation. This opacity is known as the AI black box problem, where systems are powerful but not easily understood.

Across America, Europe, Russia, Asia and Australia, readers are increasingly searching for information on AI transparency, algorithmic transparency, and explainable AI because they want clarity. If AI is shaping human lives, people deserve to know how it reaches its decisions.

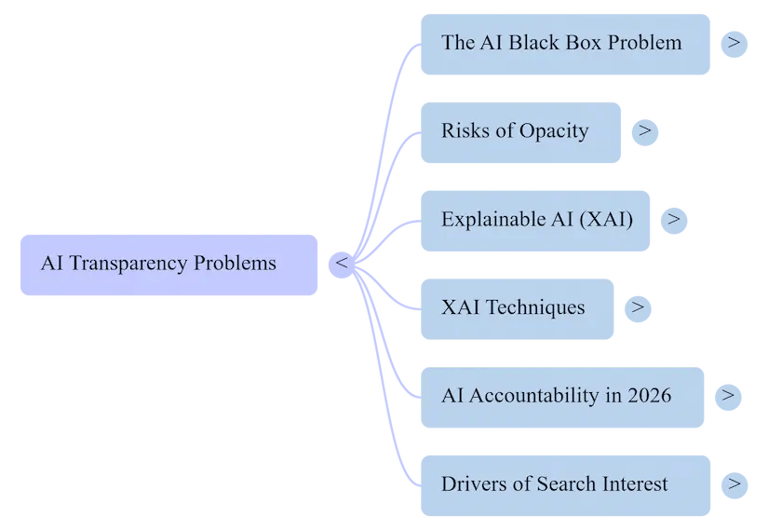

This mind map gives you a quick overview of the concepts covered below.

Why AI Transparency Problems Exist

Traditional software follows explicit rules written by programmers. Many modern AI systems do not. They learn patterns from data, often using deep neural networks with millions or even billions of parameters. Even the engineers who build these systems often cannot fully explain how a specific decision was made.
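To make the contrast concrete, here is a minimal Python sketch. The first function is the kind of explicit rule traditional software uses (the 650 threshold is a hypothetical value chosen only for illustration). The second counts the learned numbers in a plain fully connected neural network, using the standard rule that a layer mapping n inputs to m outputs has n × m weights plus m biases; the layer sizes are also hypothetical.

```python
def rule_based_decision(credit_score: int) -> str:
    """Traditional software: a programmer writes the rule, so the reason is explicit."""
    # Hypothetical threshold, for illustration only.
    if credit_score < 650:
        return "rejected: credit score below 650"
    return "approved"


def dense_parameter_count(layer_sizes: list[int]) -> int:
    """Count the learned numbers (weights + biases) in a fully connected network.

    A layer that maps n inputs to m outputs has n * m weights and m biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


if __name__ == "__main__":
    print(rule_based_decision(600))  # rejected: credit score below 650
    # Hypothetical small network: 100 inputs, two hidden layers of 512 units, 1 output.
    print(dense_parameter_count([100, 512, 512, 1]))  # 314881
```

Even this small network has 314,881 learned numbers, none of which reads like the single rule above. Production models are far larger, which is why tracing an individual decision back to a reason is so difficult.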

This lack of transparency creates real risks:

Hidden bias in training data

No clear reasoning behind automated decisions

Difficulty in auditing AI behaviour

Lack of accountability when harm occurs

A well-known real case involved an AI hiring system that unintentionally downgraded CVs from women because the historical company data it learned from reflected male-dominated hiring. The organisation eventually abandoned the system. The incident raised two questions worldwide: why is AI a black box, and how can it be made explainable?
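One simple way auditors look for this kind of hidden bias is to compare selection rates across groups. The Python sketch below uses made-up screening data (the group labels and counts are hypothetical). It divides the lowest group's selection rate by the highest; in US employment practice, a ratio below 0.8 (the "four-fifths rule") is a common rule of thumb for flagging possible adverse impact, though it is a screening heuristic rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    return min(rates.values()) / max(rates.values())


if __name__ == "__main__":
    # Hypothetical outcomes from an automated CV filter.
    decisions = (
        [("men", True)] * 6 + [("men", False)] * 4
        + [("women", True)] * 3 + [("women", False)] * 7
    )
    rates = selection_rates(decisions)
    print(rates)  # {'men': 0.6, 'women': 0.3}
    ratio = impact_ratio(rates)
    print(f"impact ratio = {ratio:.2f}")  # impact ratio = 0.50
    if ratio < 0.8:
        print("Possible adverse impact: investigate the model and its training data.")
```

A low ratio shows that outcomes are unequal, but not why the model behaves this way; answering that question is the job of explainability techniques.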

The need for transparency and explainability became urgent.

Explainable AI in Simple Terms

So, what is explainable AI?

Explainable AI (XAI) means designing systems that can clearly show the reasons behind their decisions. Instead of only giving results, they provide understandable explanations.

For instance:

A black box system says: “Loan rejected.”

An explainable system says: “Loan rejected due to low credit score, unstable income history, and high existing liabilities.”

This is the difference between explainable AI and black box systems.
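For a simple linear scoring model, an explanation like the one above can be generated directly: each feature's contribution is its weight multiplied by how far the applicant's value is from a reference value, and the most negative contributions become the stated reasons. The Python sketch below assumes hypothetical weights, reference values, feature names, and a threshold of zero; a real lender's model and criteria would differ.

```python
# Hypothetical model parameters, for illustration only.
WEIGHTS = {"credit_score": 0.02, "years_stable_income": 0.5, "debt_to_income": -8.0}
REFERENCE = {"credit_score": 700, "years_stable_income": 3, "debt_to_income": 0.30}
THRESHOLD = 0.0

REASON_TEXT = {
    "credit_score": "low credit score",
    "years_stable_income": "unstable income history",
    "debt_to_income": "high existing liabilities",
}


def explain_loan_decision(applicant: dict) -> str:
    """Score an applicant with a linear model and explain the outcome."""
    # Contribution of each feature relative to a hypothetical reference applicant.
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - REFERENCE[name]) for name in WEIGHTS
    }
    score = sum(contributions.values())
    if score >= THRESHOLD:
        return "Loan approved."
    # Features that pushed the score down, most harmful first.
    harmful = sorted((c, name) for name, c in contributions.items() if c < 0)
    reasons = [REASON_TEXT[name] for _, name in harmful]
    return "Loan rejected due to " + ", ".join(reasons) + "."


if __name__ == "__main__":
    print(explain_loan_decision(
        {"credit_score": 600, "years_stable_income": 1, "debt_to_income": 0.5}
    ))
    print(explain_loan_decision(
        {"credit_score": 760, "years_stable_income": 5, "debt_to_income": 0.2}
    ))
```

This exact breakdown only works because the model is linear. For complex models, tools such as SHAP estimate comparable per-feature contributions rather than reading them directly off the weights.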

Explainable AI Techniques

Several techniques are used to make AI models more transparent:

Feature importance graphs

Decision tree visualisations

Tools such as LIME and SHAP, which explain individual predictions (local explanations)

Human-readable rule extraction from complex models

These approaches make machine learning models more understandable, helping humans judge when the models can be trusted.
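Feature importance can be measured without opening the model at all. One common model-agnostic method is permutation importance: shuffle one feature's values across the dataset and see how much accuracy drops. The pure-Python sketch below uses a hypothetical stand-in for a black-box model and randomly generated data; libraries such as scikit-learn provide a ready-made version in sklearn.inspection.permutation_importance.

```python
import random


def black_box_model(row):
    """Hypothetical stand-in for an opaque model: we call it but never inspect it."""
    return 1 if 0.7 * row[0] + 0.3 * row[1] > 0.5 else 0


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_shuffled = [
                row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)
            ]
            drops.append(baseline - accuracy(model, X_shuffled, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    data_rng = random.Random(42)
    # 200 rows of three random features; the model ignores the third one.
    X = [[data_rng.random() for _ in range(3)] for _ in range(200)]
    # For simplicity the labels are the model's own outputs, so baseline accuracy is 1.0.
    y = [black_box_model(row) for row in X]
    scores = permutation_importance(black_box_model, X, y)
    for name, score in zip(["feature_0", "feature_1", "feature_2"], scores):
        print(f"{name}: {score:.3f}")
```

Because the model never uses feature_2, shuffling it changes nothing and its importance is exactly zero, while feature_0, which carries the larger weight inside the model, shows the biggest drop. This reveals which inputs matter overall, not why one particular applicant received a particular result; that is what local methods such as LIME and SHAP address.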

AI Accountability in 2026

By 2026, regulations around the world are pushing for algorithmic transparency. The EU AI Act, the GDPR, and emerging policies in the US, Asia, and Australia increasingly emphasise people’s right to understand automated decisions that affect them.

AI accountability in 2026 includes:

Audit trails for AI decisions

Clear documentation of data sources

Transparency practices, such as explaining decisions to the people they affect

Human oversight in high-risk AI systems

Organisations are increasingly expected, and in some cases legally required, to answer the question: “Why did the AI decide this?”
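An audit trail can be as simple as an append-only log that records, for every decision, what went in, what came out, which model version produced it, and why. The Python sketch below writes one JSON object per line (the JSON Lines convention). The field names and the rule that every rejection is flagged for human review are hypothetical choices for illustration, not a regulatory template.

```python
import json
import os
import tempfile
from datetime import datetime, timezone


def make_audit_record(decision_id, model_version, inputs, output, reasons):
    """Build one audit entry. Field names are illustrative, not a standard schema."""
    return {
        "decision_id": decision_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "reasons": reasons,
        # Hypothetical oversight policy: a person reviews every rejection.
        "needs_human_review": output == "rejected",
    }


def append_audit_record(path, record):
    """Append one record as a single JSON line; this never rewrites earlier lines."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")


def read_audit_log(path):
    """Load every record from a JSON Lines audit log."""
    with open(path, encoding="utf-8") as log:
        return [json.loads(line) for line in log]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log_path = os.path.join(tmp, "decisions.jsonl")
        record = make_audit_record(
            decision_id="app-001",
            model_version="loan-model-v1",
            inputs={"credit_score": 600, "debt_to_income": 0.5},
            output="rejected",
            reasons=["low credit score", "high existing liabilities"],
        )
        append_audit_record(log_path, record)
        for entry in read_audit_log(log_path):
            print(entry["decision_id"], entry["output"], entry["needs_human_review"])
```

In a real deployment such a log would also need access controls and tamper protection, which this sketch does not provide.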

Real Case Incident — Problem and Solution

A European financial institution faced backlash when customers discovered that its AI loan approval tool rejected applications without explanation. Regulators demanded justification.

Problem: Opaque AI decision system (AI black box)

Solution: Implementation of explainable AI techniques, a dashboard showing decision factors, and a human review panel.

After the institution adopted these explainability practices, customer trust improved and complaints dropped significantly.

Why These Topics Have Massive Search Interest

Keywords like AI transparency, explainable AI, and AI black box explainability are trending because of:

Public concern over AI fairness and accountability

Corporate and academic focus on XAI research

Legal pressure for algorithmic transparency

Real-world incidents exposing hidden AI bias

People no longer accept “the system decided” as an answer.

Related Reads on The TAS Vibe

Stay tuned for our upcoming article:

AI Surveillance Ethics: Are We Being Watched by Machines?

Disclaimer

This article is intended for educational awareness. AI technologies and global regulations continue to evolve.

Follow & Subscribe to The TAS Vibe

For more practical insights on AI, ethics, and technology, follow The TAS Vibe and stay informed about the future of intelligent systems.

Author Bio – Agni

Agni is a technology writer dedicated to making complex AI topics simple for everyday readers. He focuses on AI transparency, ethics, and responsible innovation. Through The TAS Vibe, he educates global audiences about hidden risks in modern technology. His writing style combines research depth with reader-friendly explanations. Agni believes awareness is the first step towards ethical AI adoption. He regularly explores real-world AI case studies to inform his audience. His mission is to bridge the gap between advanced technology and human understanding. Agni continues to advocate for transparent and fair AI systems worldwide.

Frequently asked questions

What is the AI black box problem?

It describes AI systems that make decisions without revealing how they arrived at them.

What is explainable AI?

Explainable AI provides understandable reasons behind AI outputs.

Why is algorithmic transparency important?

It supports fairness, builds trust, and helps organisations meet legal requirements.

How can organisations make AI more transparent?

By adopting explainable AI techniques, documentation, and human oversight.

Copyright: © 2026 The TAS Vibe. All rights reserved.